A method, procedure, process, or rule used in a particular field is typically defined as a practice of that field. In the last article, we discussed the 10 guiding principles that drive DesOps. It is no wonder that the practices involved in DesOps echo those same principles to the core. Note that we are still discussing the culture driven by DesOps / DesignOps, which is largely fuelled by these practices.

Here are the broad practices that drive the DesOps philosophies:

1. Design Thinking

This practice ensures that we employ creative problem solving along with the typical methodologies and tools of Design Thinking. Here are some quick notes and a list of the methodologies and tools used in IBM's version of it, which follows the fundamental principles of Design Thinking: https://medium.com/eunoia-i-o/quick-guide-notes-on-the-ibm-design-thinking-78490d7433dd.

The typical tools of Design Thinking, such as Stakeholder Maps, Experience Maps (As-Is and To-Be), Personas, Roadmaps, MVP, Kano Modelling, Storyboarding, Priority Grid, etc., are coupled with the continuous practices described below to implement the continuous loop of Design Thinking that underpins the DesOps philosophy.

2. User-centred Design (UCD) and Usability Design

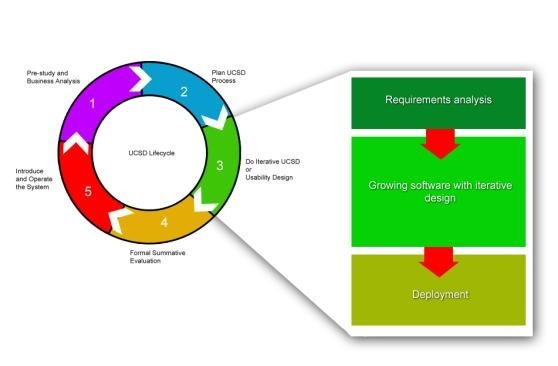

Users (both the typical user / persona from the UX angle and the segment from the marketing / business context) are at the core of DesOps. Any design solution is fundamentally an advocacy of user needs and tries to direct the business goals to build upon them. In such cases, the business goals are also market-specific and are based on the pulse of the segments, driven by the user needs. You can find a quick note on UCD and usability design here: https://www.linkedin.com/pulse/20140702070557-9377042-usability-design-and-user-centered-design-ucd/. As UCD or usability design focuses on the iterative design approach of the User Centered System Design (UCSD) process, it fundamentally contributes to DesOps goals.

(Fig – Source: UX Simplified: Models & Methodologies, 2014, ISBN 1500499587 )

UCD supports growing a product through iterative design, which is fuelled by three models of design that also contribute to Design Thinking as well as Lean UX, namely:

- Cooperative Design: This involves designers and users on an equal footing.

- Participatory Design (PD): Inspired by Cooperative Design, focusing on the participation of users.

- Contextual Design: “Customer-Centered Design” in the actual context, including some ideas from Participatory Design.

Irrespective of which of the above models we follow, all UCD models involve more or less the same set of activities, grouped into the following steps:

- STEP 1 – Planning: In this stage, the UCD process is planned and, if needed, customized. It involves understanding the business needs and setting the goals and objectives of the UX activities. Forming the right team for the UX needs, and hiring specialists if needed, also falls into this step.

- STEP 2 – User data collection and analysis: This step involves data collection through the applicable methodologies, such as user interviews, developing personas, analysing scenarios, use cases and user stories, and setting measurable goals.

- STEP 3 – Designing and Prototyping: This involves activities like card sorting, defining the information architecture (IA), wireframing and developing prototypes.

- STEP 4 – Content writing: This involves content refinement, writing for the web and similar activities.

- STEP 5 – Usability testing: This involves a set of activities: conducting tests and heuristic evaluations, and reporting the findings to allow refinement of the product. Usability testing can also have its own set of steps involving similar activities, such as planning, team forming, testing, review, data analysis and reporting.

You will see all of these naturally fall into place whenever DesOps is implemented.

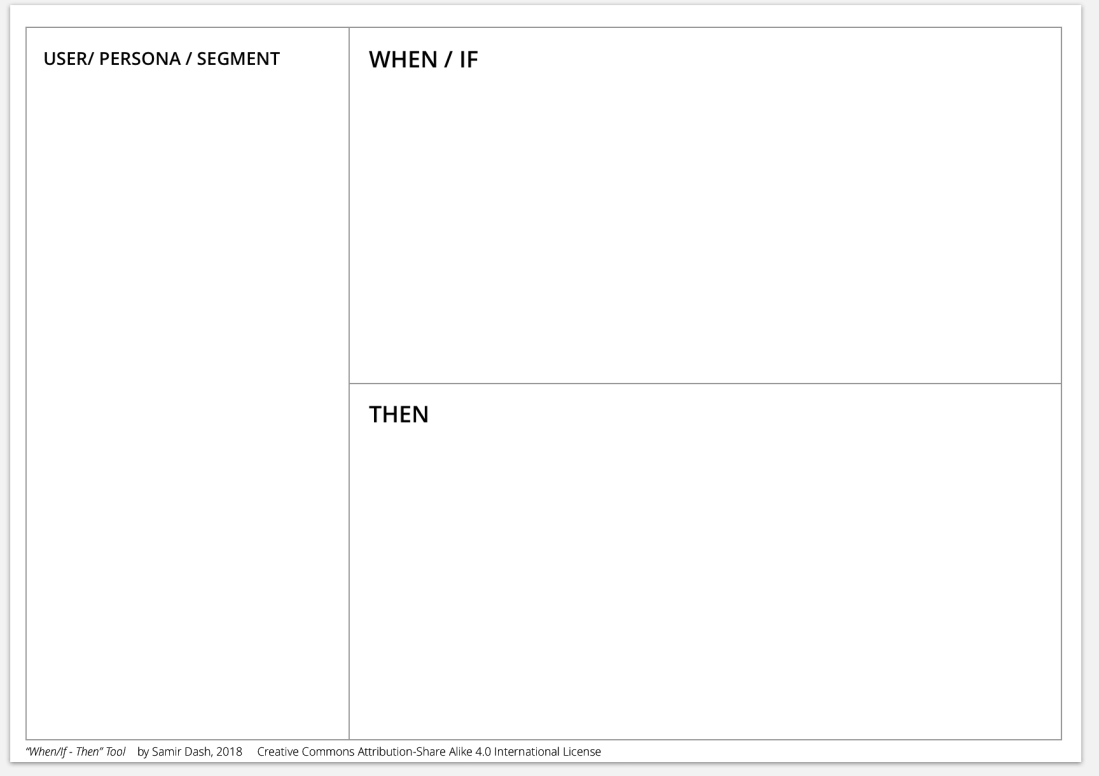

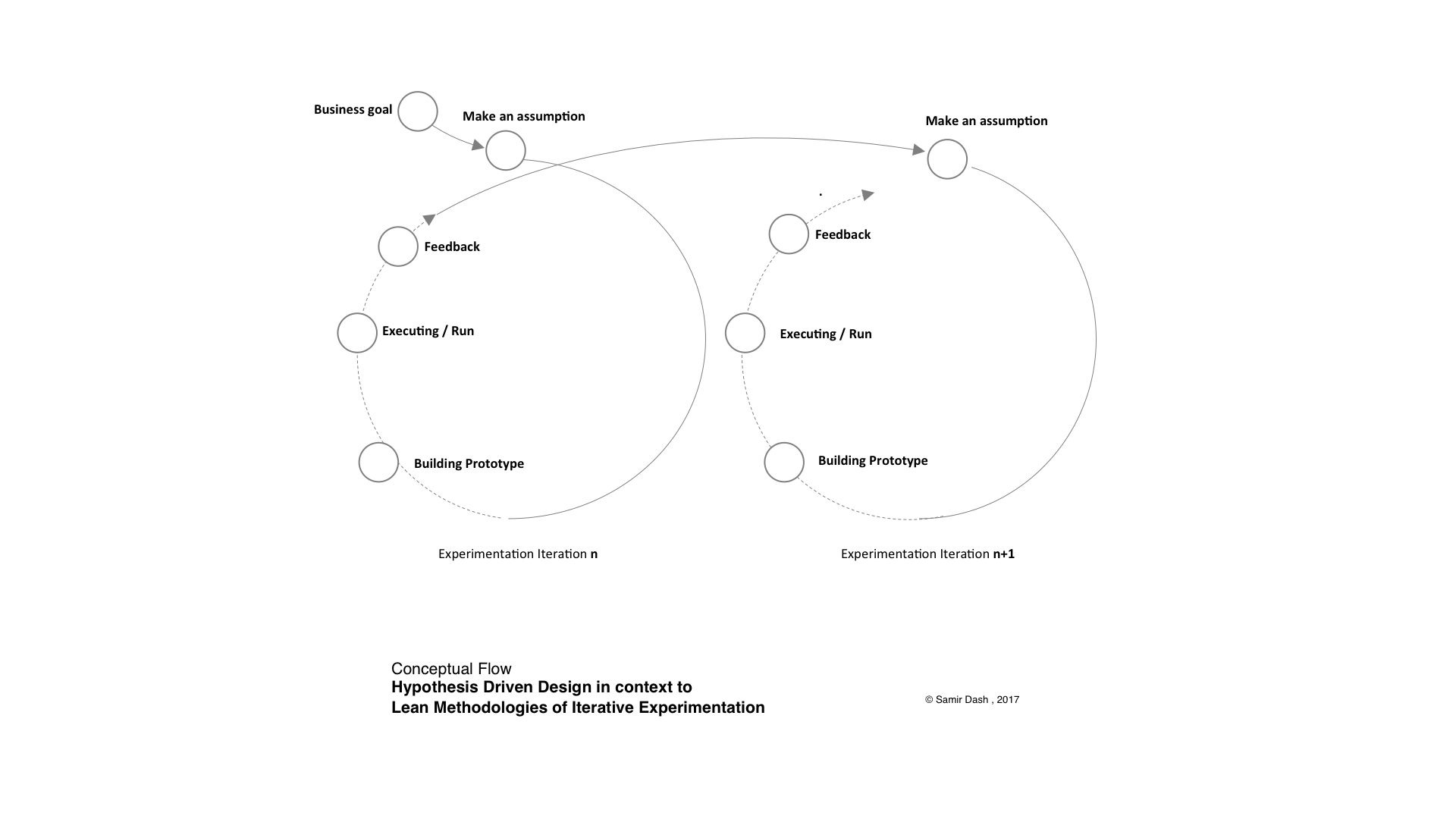

3. Hypothesis-Driven Design/Development (HDD) & Data-Driven Decision Making

The DesOps story remains incomplete without one of its key practices: Hypothesis-Driven Development (HDD). HDD contributes to service design such as DesOps by opening up possibilities for change that inculcate design thinking, innovation and organizational change. It also adapts lifecycle methods to establish an integrated workflow and work culture that make the best use of data-driven decision making, by running multiple early-stage experiments (synonymous with what we are trying to achieve through the continuous feedback loop and prototyping) and gathering insights from their outcomes (and not just output!). Interestingly, HDD also advocates UCD approaches, as it focuses on making an assumption, running experiments to validate it with measurable data, and then taking action based on the results.
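To make the "assumption, experiment, measurable data, action" loop concrete, here is a minimal sketch in Python. It assumes a hypothetical A/B experiment in which the hypothesis is "a one-page checkout increases conversion", and validates that hypothesis with a standard two-proportion z-test. The `Variant` class, the function names and the numbers are illustrative assumptions, not part of any DesOps tooling.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    """Outcome of one arm of an experiment (illustrative data only)."""
    name: str
    visitors: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visitors


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def two_proportion_z_test(control: Variant, treatment: Variant) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    pooled = (control.conversions + treatment.conversions) / (
        control.visitors + treatment.visitors
    )
    std_err = math.sqrt(
        pooled * (1 - pooled) * (1 / control.visitors + 1 / treatment.visitors)
    )
    z = (treatment.rate - control.rate) / std_err
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return z, p_value


def evaluate_hypothesis(control: Variant, treatment: Variant, alpha: float = 0.05) -> dict:
    """The hypothesis is supported only if the lift is significant AND in the expected direction."""
    z, p_value = two_proportion_z_test(control, treatment)
    supported = p_value < alpha and treatment.rate > control.rate
    return {"z": z, "p_value": p_value, "supported": supported}


if __name__ == "__main__":
    # Hypothetical numbers: 2,000 visitors per variant.
    result = evaluate_hypothesis(
        Variant("current checkout", visitors=2000, conversions=200),
        Variant("one-page checkout", visitors=2000, conversions=260),
    )
    # Act on the data: ship the change, or go back and rethink the assumption.
    print("ship it" if result["supported"] else "rethink the hypothesis", result)
```

The "action" step is deliberately simple here; in practice the outcome decides whether the team ships, iterates or discards the idea, and the next hypothesis feeds the following experiment.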

We will elaborate on HDD-driven methodologies in the context of DesOps as we move through this series of articles.

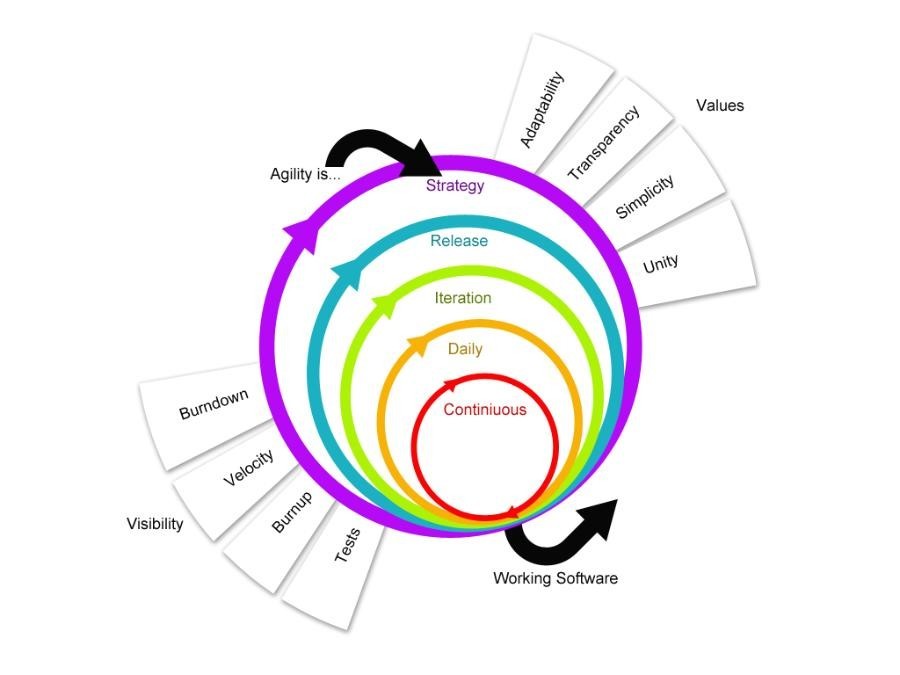

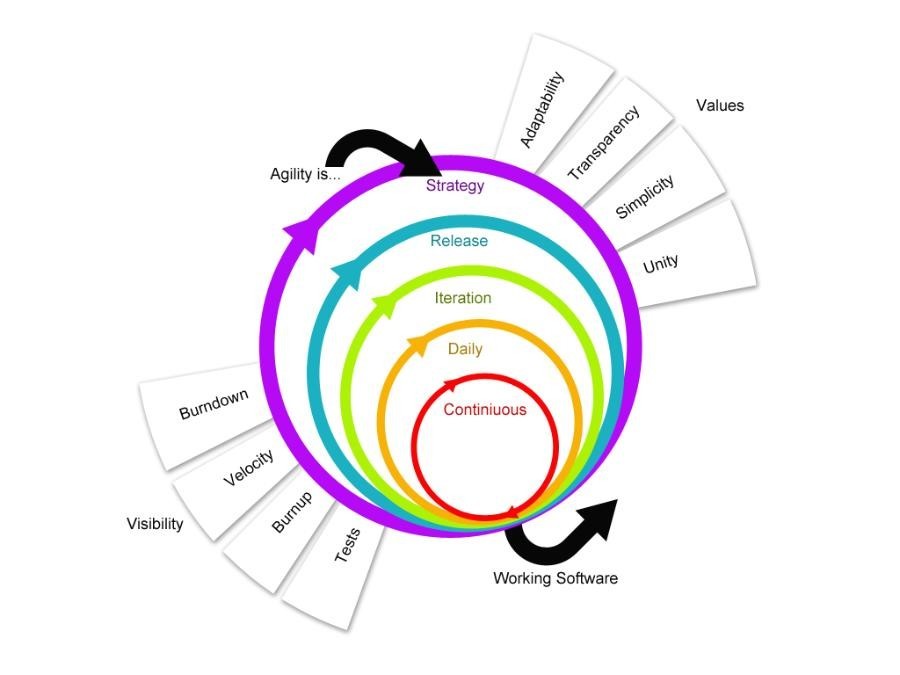

4. Agile / Iterative Life Cycle

There are several challenges in integrating UX design and related activities into a typical agile software development lifecycle process. The most common problem is typically "finding a balance between up-front interaction design and integrating interaction design with iterative coding with the aim of delivering working software instead of early design concepts". This happens mostly because typical pure SDLC approaches primarily aim at "efficient coding tactics together with project management and team organization instead of usability engineering". Agile is more "a way of thinking about creating software products" than a specific process or methodology, which hints at the challenges of integrating UX into it: integrating user research and UX design with agile is itself considered an "agile antipattern".

SDLC is, by its very idea, a process for developing software; traditionally, it has never kept the "user" in focus, or even left any scope for methodologies that bring in components not considered native ingredients of the process of creating a software product. The focus has always been the "cost, scope, and schedule" that drive any traditional SDLC model, including Agile. Sure enough, this typically gives rise to challenges for UX integration into any SDLC, as project managers never try to upset the balance of these three by reducing costs, tightening deadlines, and adding features to the specification. To know more about the typical challenges we face while implementing design / UX in an Agile SDLC, read my earlier article here: https://www.linkedin.com/pulse/20140706143027-9377042-challenges-in-ux-integration-with-different-sdlc-models/

(Fig – Source: UX Simplified: Models & Methodologies, 2014, ISBN 1500499587 )

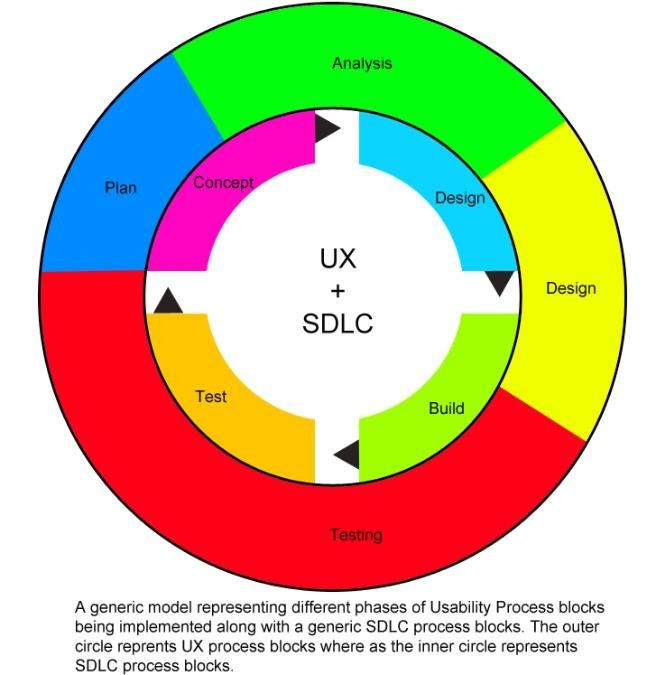

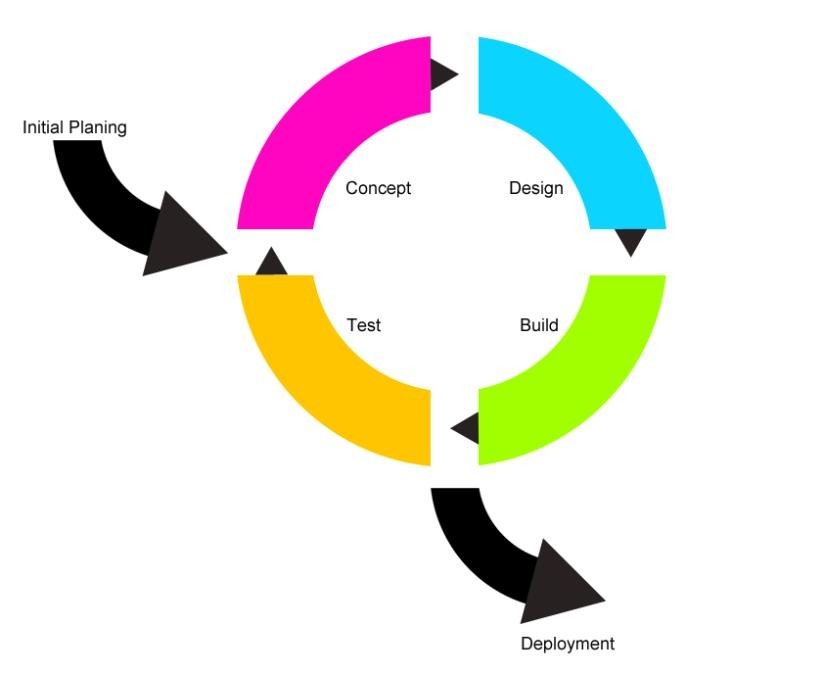

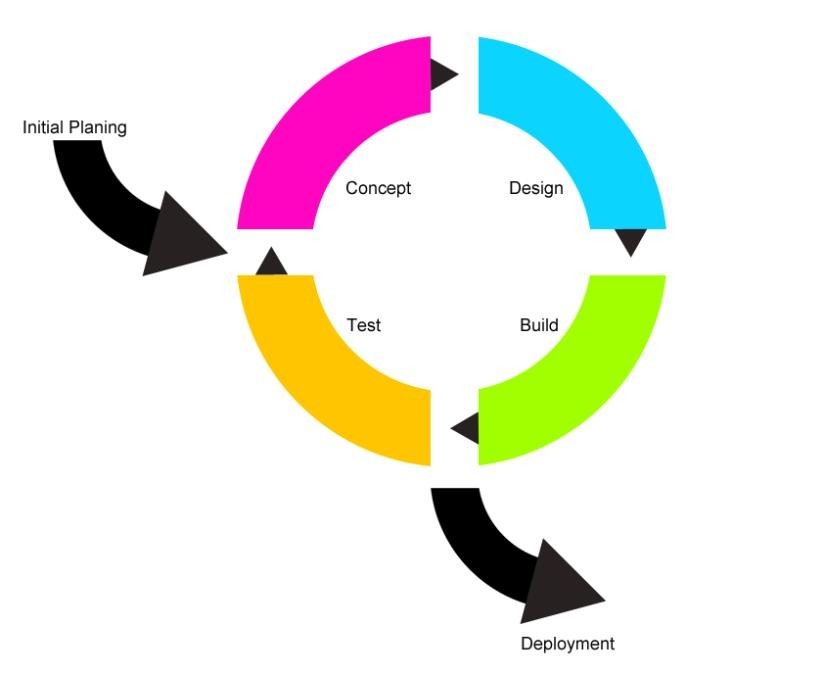

However, there are ways to mend these gaps in process-driven life cycle models such as Agile. One of them is to implement the usability design model (which we discussed above as a practice of DesOps). The usability process supplements any software development life cycle at various stages; it is not a complete product development process, as it does not output the final product at the end of the process cycle. One such solution is reflected in the following diagram:

(Fig – Source: UX Simplified: Models & Methodologies, 2014, ISBN 1500499587 )

It is interesting to see that each cycle in such a solution actually contributes to a continuous cycle of Conceptualize – Design – Build – Test, which aligns nicely with DesOps principles and other practices.

5. Lean UX Approach

The Lean UX practice builds on Lean philosophy, which focuses primarily on reducing waste in the process and provides ways to simplify and expedite delivery while keeping quality intact or even enhancing it. Interestingly, Lean UX rests on three foundations that are also part of the list of DesOps practices we are discussing:

- Design Thinking: This foundation upholds the concept that “every aspect of a business can be approached with design methods” and gives “designers permission and precedent to work beyond their typical boundaries”.

- Agile Software Development: Core values of Agile are the key to Lean UX.

- Lean Startup method: Lean Startup uses a feedback loop called "build-measure-learn" to minimize project risk and get teams building and learning quickly.

None of the practices used in Lean UX is new; rather, Lean UX is "built from well-understood UX practices". Many techniques that have been used over time in various UX processes, and that remain practical today, have been neatly packaged in Lean UX. So the following foundational pillars of Lean UX also support DesOps, which inherits them from this practice:

- Cross-Functional Teams: Specialists from various disciplines come together to form a cross-functional team to create the product. Such a team typically includes software engineering, product management, interaction design, visual design, content strategy, marketing, and quality assurance (QA).

- Small, Dedicated, Collocated: Keep your teams small, with no more than 10 core people in total. The benefit of small teams comes down to three words: communication, focus, and camaraderie. A smaller team is also easier to manage in terms of status reporting, change management and learning.

- Progress = Outcomes, Not Output: The focus should be on business goals, which are typically the "outcomes", rather than on the output product, system or service.

- Problem-Focused Teams: "A problem-focused team is one that has been assigned a business problem to solve, as opposed to a set of features to implement".

- Removing Waste: This is one of the key ingredients of Lean UX, focused on the "removal of anything that doesn't lead to the ultimate goal" so that the team's resources can be utilized properly.

- Small Batch Size: Lean UX follows the "notion to keep inventory low and quality high".

- Continuous Discovery: "Regular customer conversations provide frequent opportunities for validating new product ideas".

- GOOB: The New User-Centricity: GOOB stands for "getting out of the building". Meeting-room debates about user needs won't be settled conclusively within your office; instead, the answers lie out in the marketplace, outside of your building.

- Shared Understanding: The more a team collectively understands what it’s doing and why, the less it has to depend on secondhand reports and detailed documents to continue its work.

- Anti-Pattern: Rock-stars, Gurus, and Ninjas: Team cohesion breaks down when you add individuals with large egos who are determined to stand out and be stars. So more effort should go into team collaboration.

- Externalizing Your Work: “Externalizing gets ideas out of teammates’ heads and on to the wall, allowing everyone to see where the team stands”.

- Making over Analysis: “There is more value in creating the first version of an idea than spending half a day debating its merits in a conference room”.

- Learning over Growth: “Lean UX favours a focus on learning first and scaling second”.

- Permission to Fail: "Lean UX teams need to experiment with ideas. Most of these ideas will fail. The team must be safe to fail if they are to be successful".

- Getting Out of the Deliverables Business: "The team's focus should be on learning which features have the biggest impact on their customers. The artefacts the team uses to gain that knowledge are irrelevant."
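The "communication" benefit in the "Small, Dedicated, Collocated" pillar can be illustrated with a well-known piece of arithmetic popularized by Fred Brooks in The Mythical Man-Month: a team of n people has n(n − 1)/2 possible one-to-one communication paths, so coordination overhead grows much faster than headcount. The short Python sketch below only does that arithmetic; it is an illustration, not a team-sizing rule.

```python
def communication_paths(team_size: int) -> int:
    """Number of distinct pairwise communication paths in a team of team_size people."""
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    for size in (5, 10, 20):
        print(f"{size} people -> {communication_paths(size)} paths")
    # Prints:
    # 5 people -> 10 paths
    # 10 people -> 45 paths
    # 20 people -> 190 paths
```

Doubling a team from 10 to 20 people more than quadruples the number of paths (45 to 190), which is one way to see why Lean UX caps core teams at around 10 people.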

You can read more in one of my articles here: https://www.linkedin.com/pulse/20140710010240-9377042-lean-ux-another-agile-ux/?

6. Fail-Fast through Prototyping

Typically, Fail-Fast is about immediately reporting any condition that is likely to indicate a failure. Fail-Fast also allows gathering early-stage feedback that serves as input for the continuous UCD model, which uses that input to bring up solutions to design issues and thereby minimizes the risk of the product failing in the hands of users or in the market. This philosophy also aligns with the Lean Startup methodology and accelerates innovation, as it encourages taking early-stage risks. Typically, startup cultures undertake bold experiments to determine the long-term viability of a product or strategy, rather than proceeding cautiously and investing years in a doomed approach. In service design, this helps improve processes so that they make use of systems that support Lean methodologies and models. The great part is that in DesOps, when combined with UCD processes, this provides options to run short, quick iterative UCD cycles following a Think – Make – Break kind of model.

(Fig – Source: UX Simplified: Models & Methodologies, 2014, ISBN 1500499587 )

Prototypes play a crucial role in UCD models in achieving Fail-Fast, ensuring that early feedback on the design is received and can contribute to the evolution of the product. Prototypes of different fidelity are used to ensure that the target experience can be tested.

7. Continuous Discovery

Continuous Discovery is primarily involved with the conceptualization stage of the product lifecycle. This practice is mostly driven by factors like:

- User focus: The goals of the activity, the work domain or context of use, the users’ goals, tasks and needs should control the development.

- Active user involvement: Representative users should actively participate, early and continuously throughout the entire development process and throughout the system life cycle.

- Simple design representations: The design must be represented in such ways that it can be easily understood by users and all other stakeholders.

- Explicit and conscious design activities: The development process should contain dedicated design activities.

This practice, however, is not limited to the conceptualization stage; it is organically part of the evaluation and build stages as well, because the outcomes of those stages of the life cycle (feedback and evaluation results) flow back into it and aid the discovery of the solution through the design process.

8. Continuous builds and delivery

This practice focuses on continuous design delivery, which ensures that DesOps sustains the lifecycle and supports iterative UCD cycles. It involves the processes that support the design of the solution and thereby contribute to system development that is iterative and incremental. In the early part of the life cycle, this practice typically draws life from prototyping, which is used to visualize and evaluate ideas and design solutions in cooperation with the users.

So the factors in this practice are:

- Evolutionary systems development: The systems development should be both iterative and incremental.

- Prototyping: Early and continuously, prototypes should be used to visualize and evaluate ideas and design solutions in cooperation with the users.

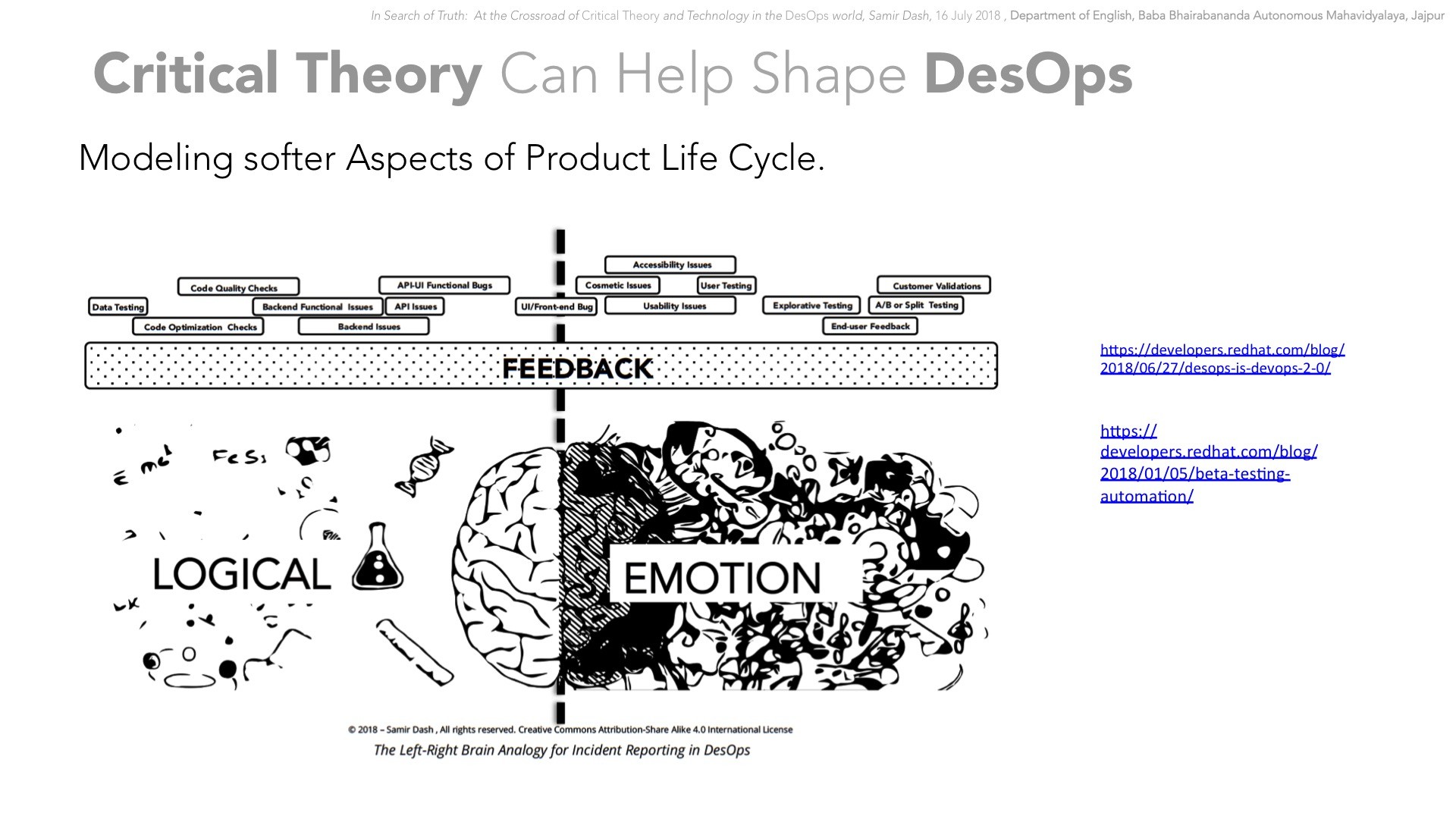

9. Integrated & incremental testing

Evaluation and feedback from all stages of the lifecycle are key to any DesOps implementation; therefore, integrated testing (including usability testing) carried out in an incremental fashion plays a particularly strong role among all the practices. This draws from UCD models running a User Centered System Design (UCSD) approach. UCD experts help in benchmarking usability tests, popularly known as "summative evaluations", which evaluate the performance of the developed system / product on several grounds. The metrics of these tests are typically based on the "error rate for users as they use the system", the "time it takes to attain proficiency performing a task", and the "time it takes to perform a task once proficiency has been attained". So the factor that drives this practice is:

- Evaluate use in context: Baseline usability goals and design criteria should control the development.

Note that the key here is that all testing should support evaluation in context. Getting the data in the real context of use is what makes this effective, and thereby makes DesOps more fruitful.
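The three summative metrics named above can be computed from logged task attempts. The Python sketch below assumes a hypothetical logging format (each attempt records its duration and error count) and a hypothetical proficiency criterion (completing the task within a target time with no more than a given number of errors); a real study would derive that criterion from its own baseline usability goals. Error rate is taken here as errors per attempt, which is one of several common definitions, and all names and data are illustrative.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Attempt:
    """One participant's attempt at a benchmark task (hypothetical logging format)."""
    duration_s: float  # time taken to complete the task, in seconds
    errors: int        # number of errors observed during the attempt


def error_rate(attempts: list[Attempt]) -> float:
    """Average number of errors per attempt."""
    return sum(a.errors for a in attempts) / len(attempts)


def first_proficient(attempts: list[Attempt], target_s: float, max_errors: int = 0) -> Optional[int]:
    """Index of the first attempt meeting the assumed proficiency criterion, or None."""
    for i, attempt in enumerate(attempts):
        if attempt.duration_s <= target_s and attempt.errors <= max_errors:
            return i
    return None


def time_to_proficiency(attempts: list[Attempt], target_s: float, max_errors: int = 0) -> Optional[float]:
    """Total time spent up to and including the first proficient attempt."""
    idx = first_proficient(attempts, target_s, max_errors)
    return None if idx is None else sum(a.duration_s for a in attempts[: idx + 1])


def proficient_time_on_task(attempts: list[Attempt], target_s: float, max_errors: int = 0) -> Optional[float]:
    """Mean task time from the first proficient attempt onwards."""
    idx = first_proficient(attempts, target_s, max_errors)
    return None if idx is None else mean(a.duration_s for a in attempts[idx:])


if __name__ == "__main__":
    # Hypothetical session: one participant repeating the same task five times.
    session = [Attempt(95, 3), Attempt(70, 1), Attempt(48, 0), Attempt(42, 0), Attempt(40, 0)]
    print("errors per attempt:", error_rate(session))
    print("time to proficiency (s):", time_to_proficiency(session, target_s=50))
    print("time on task once proficient (s):", proficient_time_on_task(session, target_s=50))
```

Running the same functions on data collected in the real context of use, rather than in a lab, is what keeps these numbers aligned with the "evaluate use in context" factor.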

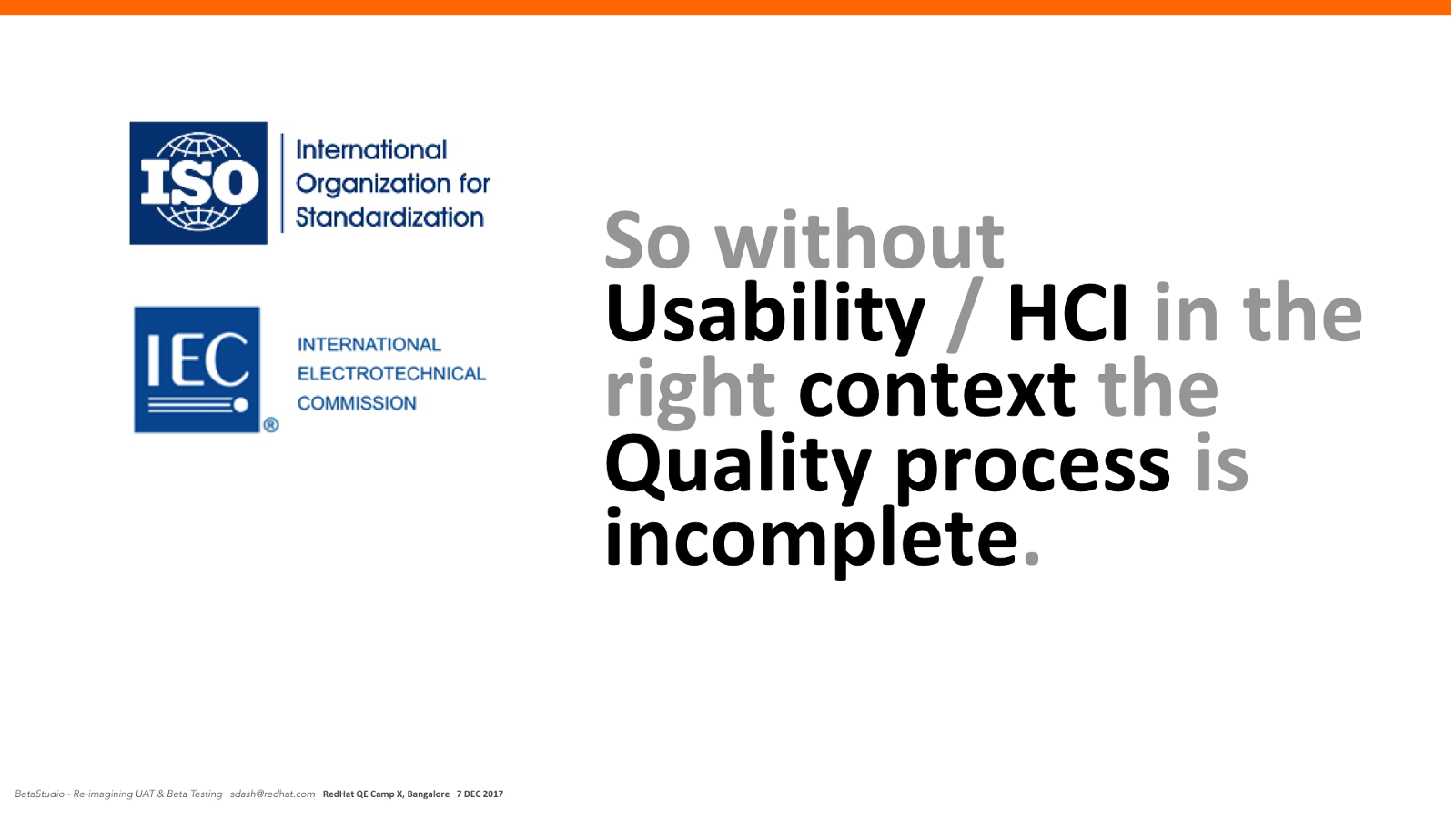

(Fig – Source: Re-imagining Beta Testing in the Ever-Changing World of Automation: https://medium.com/eunoia-i-o/re-imagining-beta-testing-in-the-ever-changing-world-of-automation-3579ac418007 )

The ISO standard also defines a quality process in which context plays the major role. Interestingly, usability testing and HCI aspects are all driven by context as well. Read one of my articles on how context plays a critical role in testing and usability, which also narrates an experiment named BetaStudio, here: https://medium.com/eunoia-i-o/re-imagining-beta-testing-in-the-ever-changing-world-of-automation-3579ac418007

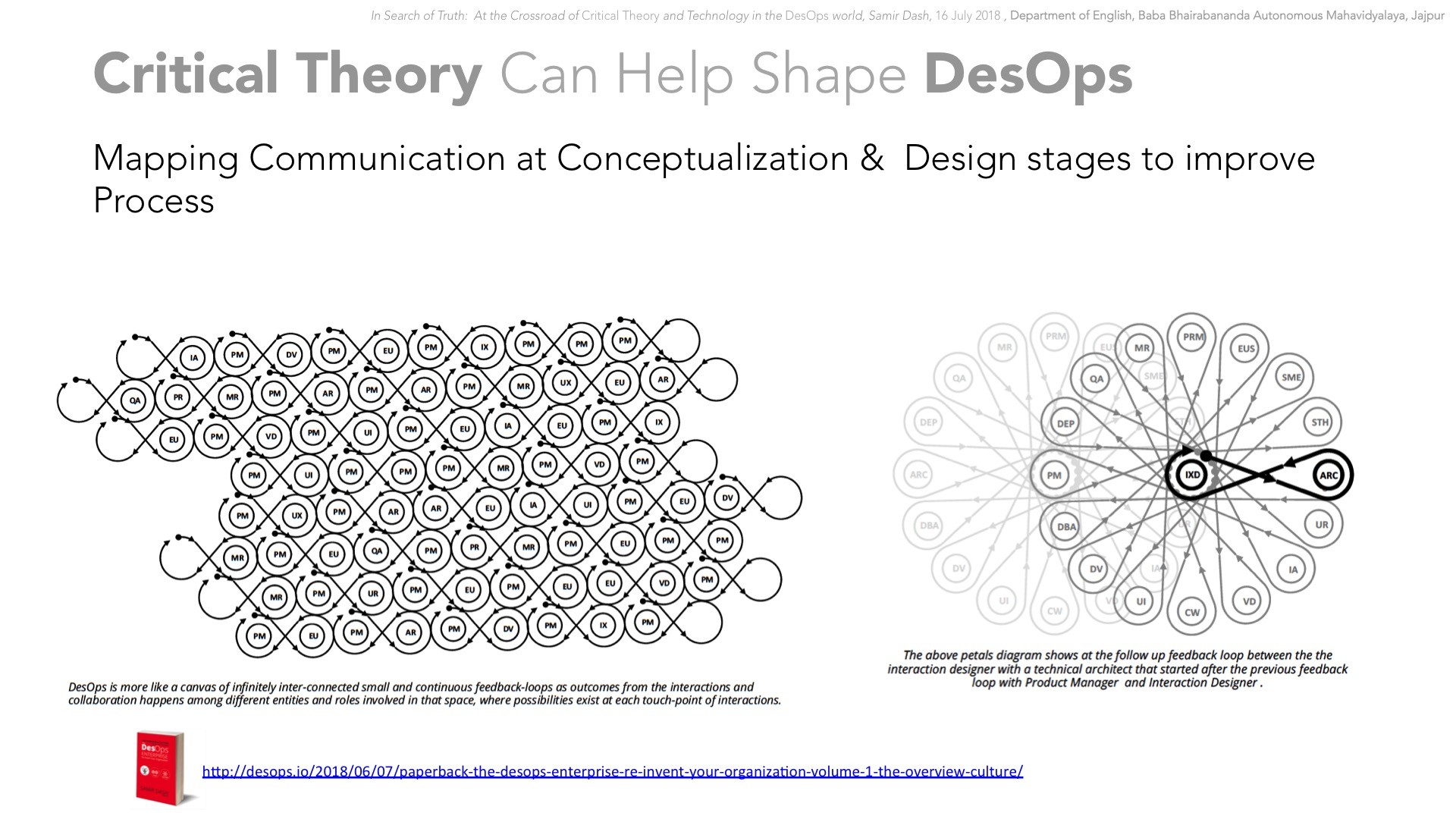

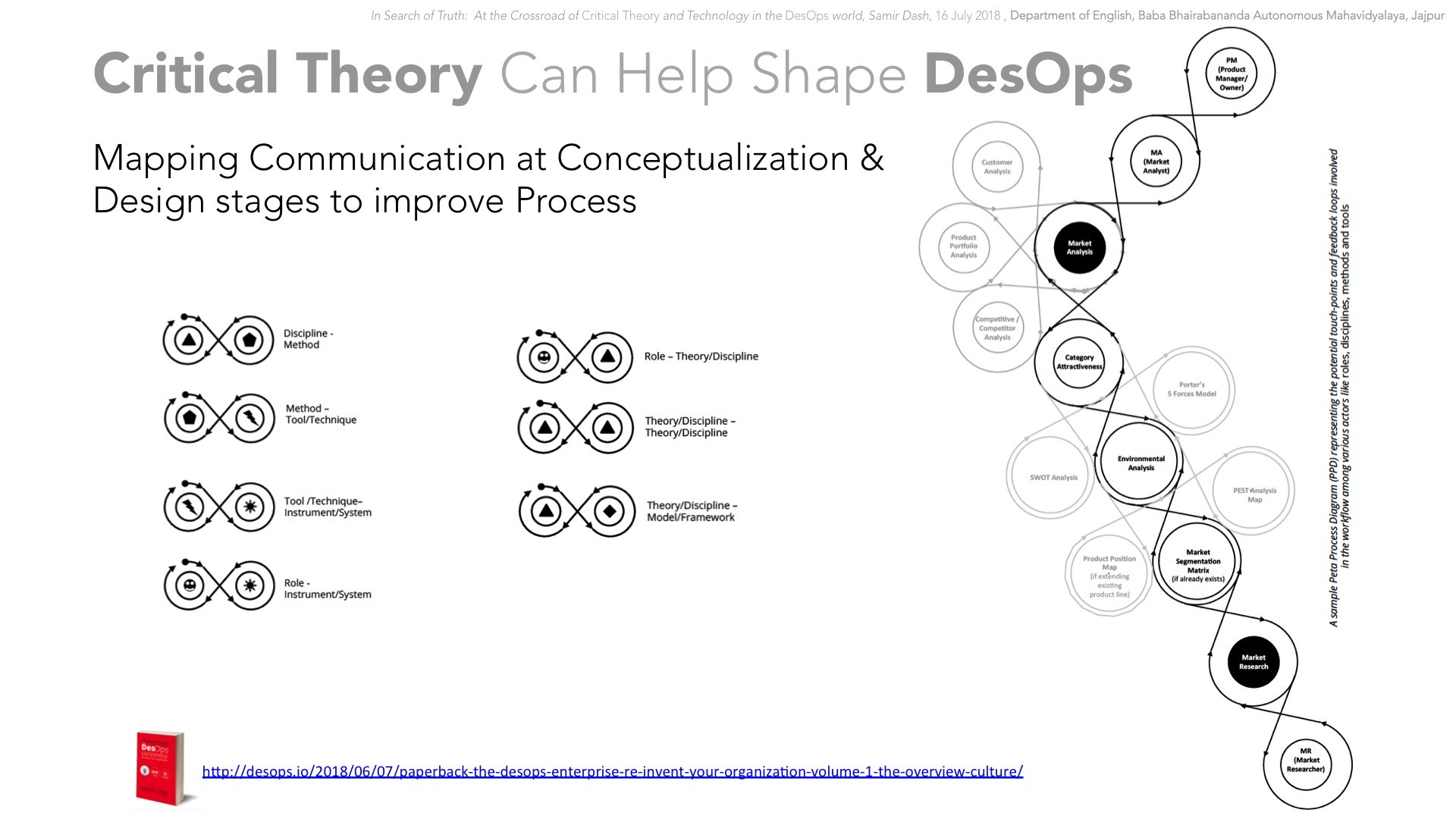

10. Service Design on an Integrated Feedback-Loop Model

The integrated feedback loop is more than just getting test reports. This practice ensures that feedback flows from any point to any point as needed, be it from stakeholders to designers, end users to developers, testers to designers, or along any path from one persona to another. It also includes the service design that helps implement DesOps by ensuring that information, as well as feedback, flows seamlessly, including to and from systems and different roles. Such service design typically employs recent technologies such as automation, machine learning and artificial intelligence.
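As a rough illustration of feedback flowing "from any point to any point", here is a minimal in-memory publish/subscribe hub in Python. The roles, topics and class names are hypothetical; a real DesOps setup would more likely sit on existing tooling (issue trackers, message queues, analytics pipelines) and could add automation or ML-based triage on top, which this sketch does not attempt.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Feedback:
    sender: str   # role raising the feedback, e.g. "tester" or "end-user"
    topic: str    # what the feedback is about, e.g. "checkout-flow"
    message: str


class FeedbackBus:
    """Routes feedback on a topic to every role subscribed to it, whatever the sender's role."""

    def __init__(self) -> None:
        self._subscribers: dict[str, set[str]] = defaultdict(set)     # topic -> roles
        self._inboxes: dict[str, list[Feedback]] = defaultdict(list)  # role -> feedback received

    def subscribe(self, role: str, topic: str) -> None:
        self._subscribers[topic].add(role)

    def publish(self, feedback: Feedback) -> list[str]:
        """Deliver feedback to all subscribers except the sender; return the recipients."""
        recipients = sorted(r for r in self._subscribers[feedback.topic] if r != feedback.sender)
        for role in recipients:
            self._inboxes[role].append(feedback)
        return recipients

    def inbox(self, role: str) -> list[Feedback]:
        return list(self._inboxes[role])


if __name__ == "__main__":
    bus = FeedbackBus()
    for role in ("designer", "developer", "stakeholder", "tester"):
        bus.subscribe(role, "checkout-flow")
    # A tester's finding reaches designers, developers and stakeholders alike.
    print(bus.publish(Feedback("tester", "checkout-flow", "Users miss the promo-code field")))
    # ['designer', 'developer', 'stakeholder']
    # An end user (not a subscriber) can still send feedback into the same loop.
    print(bus.publish(Feedback("end-user", "checkout-flow", "Checkout felt slow on mobile")))
    # ['designer', 'developer', 'stakeholder', 'tester']
```

Routing by topic rather than by fixed sender/receiver pairs is the design choice that lets any role reach any other role without the paths being hard-coded.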

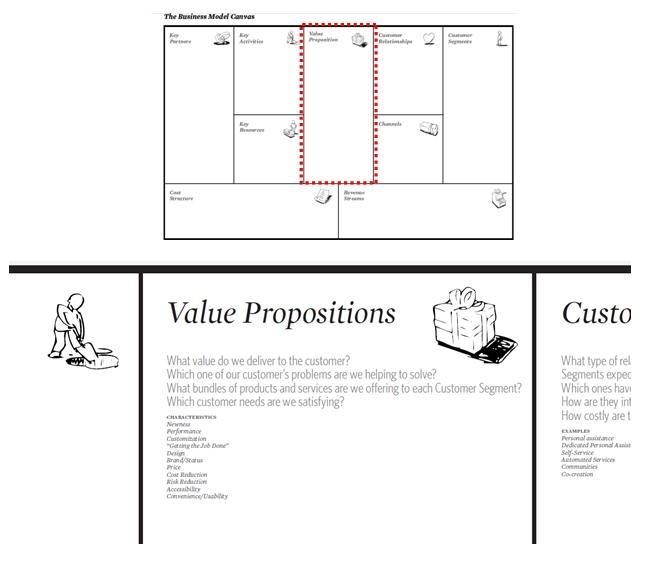

I hope this article was helpful. Keep tuning in for the next parts of this article series. Before moving on to the next points on the culture of DesOps, we will look into a Business Model Canvas and see how DesOps fits in. Do share the word.

(c) 2018, Samir Dash. All rights reserved.